Composer and current SEAMUS Member At Large Per Bloland discusses the genesis and evolution of the EMPP, from its inception at CCRMA through various stages of development, up through its most recent incarnation as the focus of a research project at IRCAM. Bloland responded to the following questions, posed by the editor Steven Ricks, via email.

How did the idea for the “Electromagnetically-Prepared Piano” occur to you?

Back when I was serious about my trumpet playing I would, as you might expect, spend many hours in practice rooms, most of which had pianos. I started to notice and really enjoy the way the high undamped strings of the piano responded to my playing, and began holding down the damper pedal while practicing. This led to an early piece of mine, Thingvellir, for solo trumpet, in which the trumpet plays into a microphone connected to a small amplifier. The amp is placed under a grand piano, as close to the soundboard as possible, and the damper pedal is propped down allowing the strings to vibrate sympathetically. The result is similar to a long-tailed reverb, but has its own quite distinct quality. Luckily at the time I was blissfully unaware of Berio’s trumpet Sequenza, or I might have scrapped the whole thing. I liked the effect though, and started to think about how to make the whole system louder and improve the detail of control.

During my first year at Stanford I took a class at CCRMA, and one day at lunch I happened to be discussing some ideas with some of the other CCRMA-lites. I mentioned two possibilities: either placing electromagnets over piano strings, or attaching many tiny speakers to the frame at the nut, each pointing to a string complex (the speaker idea, by the way, was a terrible one). Steven Backer was there, and became very interested. He immediately saw possibilities for working with electromagnets that had never occurred to me. I had been thinking of the EBow model, in which there is little control over timbre. He realized that supplying the electromagnets with an arbitrary audio signal (rather than using feedback, as the EBow does) would open up a huge range of timbral possibilities. He also knew of another student, Edgar Berdahl, who was working on similar issues at the time, and began discussing the project with him. This led to a fantastic and rather rushed collaboration, the result of which was the Electromagnetically-Prepared Piano (EMPP), version 1.0. I was in the process of working on a piece with a set performance date (an ASCAP/SEAMUS commission, as it happens), and decided that the piece would use the yet-to-be-invented device. Once we had one electromagnet working I was able to glean some idea of what to expect. It was, nonetheless, all rather speculative until the device was fully built. Which it was, I am happy to say, in plenty of time for the performance.

What grants/resources/individuals made its creation possible?

The initial collaboration among Steven, Edgar, and me was what set the project in motion. That first version of the device, created back in 2005, was funded by CCRMA. It carried me through years of demonstrations and performances, and is still in perfect working order. It does have two problems, though. First, the rack used to support the electromagnets over the piano strings is difficult to manage. I would often find myself spending two full hours adjusting and tuning the device before a performance. The rack was constructed (mostly by me; that was one thing technically simple enough for me to handle) from parts purchased at a hardware store, and was less than ideal. The second problem involved power. That first version was designed to avoid melting electromagnets, which would be rather disastrous in the middle of a performance. But this power limitation resulted in a corresponding volume limitation. Exciting the lower partials was often quite effective, and the resulting sound quite loud. However, with higher partials, if an electromagnet happened to be above a node of the partial it was trying to excite, very little sound would result. This was very difficult to predict and often impossible to correct. I learned to live with pitches being absent from a given performance. The number of missing pitches also varied from piano to piano, which could affect the overall volume of the system. The problem arises because the nodes of higher partials are simply more closely spaced and therefore more difficult to avoid. More powerful electromagnets would allow me to overcome this problem by brute force. The trick was to find an amplifier with just the right amount of power for the new electromagnets.
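A rough way to see why higher partials are harder to excite is to treat each string as an ideal string fixed at both ends, where partial n has the mode shape sin(nπx/L) and adjacent nodes are L/n apart. The sketch below assumes exactly that idealization (no stiffness or inharmonicity, a point-like actuator); the function names, the normalized length, the actuator position of 0.25, and the 0.1 "weak" threshold are all hypothetical illustrations, not measurements of the EMPP.

```python
import math

def mode_amplitude(n: int, x: float, length: float) -> float:
    """Relative displacement of partial n at position x on an ideal string of the
    given length, fixed at both ends: |sin(n * pi * x / length)|."""
    return abs(math.sin(n * math.pi * x / length))

def node_spacing(n: int, length: float) -> float:
    """Distance between adjacent nodes of partial n: length / n."""
    return length / n

def weak_partials(x: float, length: float, max_n: int, threshold: float = 0.1) -> list[int]:
    """Partials 1..max_n whose mode shape at x falls below threshold, i.e. partials
    that an actuator sitting at x would barely be able to drive."""
    return [n for n in range(1, max_n + 1) if mode_amplitude(n, x, length) < threshold]

if __name__ == "__main__":
    length = 1.0  # normalized string length (hypothetical)
    x = 0.25      # actuator a quarter of the way along the string (hypothetical)
    for n in (1, 2, 4, 8, 12):
        print(f"partial {n:2d}: node spacing {node_spacing(n, length):.3f}, "
              f"amplitude at actuator {mode_amplitude(n, x, length):.3f}")
    print("weak partials:", weak_partials(x, length, 16))
```

With the actuator a quarter of the way along the string, partials 4, 8, 12, and 16 sit exactly on nodes. Because node spacing shrinks as L/n, almost any actuator position ends up near a node of some high partial, which is consistent with the hard-to-predict missing pitches described above.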

At some point I became aware of the work that Andrew McPherson has done with his Magnetic Resonator Piano, which is in many ways similar. We began discussing our systems and I discovered that he, having run into the same problem of power, had designed a more powerful amplifier board and matched it with larger electromagnets. He subsequently had one built for me following his design, which I am currently using. The one drawback, as I soon discovered, is that it is now quite easy to melt the electromagnets. Although an electromagnet coil melting over a piano string makes a truly wonderful sound, replacement is prohibitively expensive. (I should also mention that even if that should happen, and it has only happened once, it’s just the coil inside the metal casing that melts. No piano has ever been harmed! Really!)

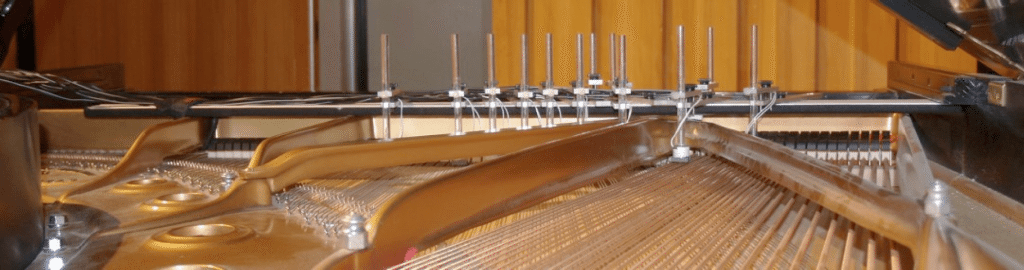

The problem of the rack was solved last year, when I arrived at my current position at Miami University (yeah, it’s in Ohio) and discovered an amazing resource: the Miami University Instrumentation Lab. The name is a bit misleading for a musician, but a chemist would probably understand it immediately – they design and build a variety of devices for laboratories across the campus. I approached them with the inefficiencies inherent in my rack, and they designed and built an alternative system. The improvement still surprises me every time I set it up.

At the risk of delving into the minutiae of the project, I thought I might provide some details about these improvements. First of all, when I refer to the rack, I mean specifically the hardware that is designed to suspend the electromagnets (which are metal cylinders about an inch in diameter) above the piano strings. The following might make more sense after looking at some photos. Details on the new system can be found here: http://magneticpiano.com/?page_id=79

Details on the old system can be found here: http://magneticpiano.com/?page_id=76

Both systems rely on a bar that rests on the piano frame, to which much smaller brackets, reaching out over the strings, are attached. Each electromagnet is suspended beneath its bracket by a bolt. Designing the details of this system is tricky, though, as each electromagnet must be adjustable in a wide variety of ways. It must be adjustable: 1) over the full range of the piano, 2) along the length of its string (to some degree; the more the better), 3) in terms of its height, or distance from the strings (the most important one), and 4) in terms of its rotation. The main problem with the first system involved the height adjustment. A long threaded bolt suspended each electromagnet beneath its bracket. These bolts were held in place on the bracket by two opposing wing nuts. So to move the electromagnet up or down, one wing nut had to be loosened and the other tightened. Over and over again. And then back again, because I had probably gone a bit too far. It was maddening. The new system has an adjustable finger screw on top of the bracket that adjusts the height easily without changing the rotation of the electromagnet. A simple twist of the screw and I am done; it’s really quite amazing.

What musical possibilities interested you most with the EMPP? How did you explore them in the pieces you’ve composed for it?

A large part of the appeal lies in the fact that the resulting sounds are fundamentally acoustic. The only source of sound is vibrating piano strings (coupled, of course, with the rest of the piano). As a matter of fact, at the time I was writing that first piece, I was growing rather weary of composing electronic music in general. Fulfilling a SEAMUS commission with an acoustic piece offered the perfect solution! I am well past that weariness now, but am still very much drawn to the re-appropriation of acoustic instruments, especially ones as hallowed as the grand piano. The effect of seeing and hearing the instrument live is much more compelling, I think, than simply hearing a recording. Part of this surely involves the radiation pattern inherent in such a large acoustic system. Another part, perhaps more significant and certainly more difficult to explain, involves our expectations and the way we filter information. We know what a piano does. It’s very easy to relax into our expectations about the inherent timbral limitations of such a familiar instrument. The use of extended techniques, for example the wide variety of sounds that can be generated by playing inside the piano, challenges this comfortable familiarity in ways that I find extremely appealing. The EMPP is simply an extension of this process of defamiliarization, but one that has the potential to remove the instrument almost entirely from the realm of “pianistic.” It forces us to reconsider the piano for what it is in a physical sense, in all its amazing complexity, rather than rely on our existing conceptions of that which is piano.

The first piece I composed for the device (Elsewhere is a Negative Mirror) was for solo piano and electromagnets, the second (Negative Mirror Part II) for ensemble and piano with electromagnets. Both followed the same basic plan, and were conceived as parts I and II of a set. In these pieces the piano part is written using more or less standard notation, and the performer plays both on the keyboard and inside the piano, but doesn’t physically interact with the electromagnets. The electromagnets are controlled by a computer programmed to move through a variety of “scenes.” The performer advances the software through the scenes with a foot pedal, but otherwise has no control over the sounds that result from the electromagnets. In a given scene, seven or eight of the twelve available strings might be actuated at a time. Those strings are simply excluded from the pool of pitches available to the performer on the keyboard, so a key is never struck while its string is being actuated. The result is that the piano essentially has two performers with distinct parts: the human and the computer. These parts are related, but no more so than, say, the piano and flute parts of a duo.
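The scene logic described above can be sketched as a small data structure: each scene is the set of actuated pitches, a foot-pedal press advances to the next scene, and the keys available to the pianist are the full keyboard minus the current scene. This is a hypothetical sketch, not the software used in these pieces; the class name, the use of MIDI note numbers, and the twelve-string check are illustrative assumptions.

```python
from dataclasses import dataclass

KEYBOARD = frozenset(range(21, 109))  # MIDI notes 21..108 (A0..C8) on an 88-key piano
MAX_ACTUATED = 12                     # twelve electromagnets in this setup

@dataclass
class SceneSequencer:
    """Hypothetical list of electromagnet scenes; the foot pedal steps forward."""
    scenes: list[frozenset[int]]
    index: int = 0

    def __post_init__(self) -> None:
        if not self.scenes:
            raise ValueError("need at least one scene")
        # Every scene must fit on the available electromagnets and the keyboard.
        for scene in self.scenes:
            if len(scene) > MAX_ACTUATED or not scene <= KEYBOARD:
                raise ValueError(f"invalid scene: {sorted(scene)}")

    def actuated(self) -> frozenset[int]:
        """Pitches whose strings the electromagnets are currently driving."""
        return self.scenes[self.index]

    def pedal(self) -> frozenset[int]:
        """Advance to the next scene (staying on the last one) and return it."""
        if self.index < len(self.scenes) - 1:
            self.index += 1
        return self.actuated()

    def playable_keys(self) -> frozenset[int]:
        """Keys the pianist may strike: everything not currently actuated."""
        return KEYBOARD - self.actuated()


seq = SceneSequencer([frozenset({60, 64, 67, 71}), frozenset({62, 65, 69})])
print(60 in seq.playable_keys())  # False: middle C is being actuated
seq.pedal()
print(60 in seq.playable_keys())  # True
```

Checking each notated keyboard passage against `playable_keys()` for its scene would enforce the rule that a key is never struck while its string is being actuated.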

The third piece, Of Dust and Sand, was written for alto saxophone and piano with electromagnets. I was interested in having the performer interact with the electromagnets directly, and in coming up with a more integrated system. In this piece, the top seven electromagnets are active for the duration, constantly attempting to vibrate their respective strings. The role of the performer is to dampen this vibration using their fingertips. To do this, the performer stands and leans over the open piano frame, pressing on the actuated strings between the nut and the frame. To play the notes indicated in the score, the performer lifts the appropriate finger, allowing the string to sound. The system thus becomes an anti-piano: a note sounds when a finger is lifted. However, the damping is imperfect. The electromagnets are powerful enough that sound leaks through even when there is a great deal of finger pressure on the strings. One particularly nice outcome of this setup is that it becomes fairly easy to create gradual crescendos and decrescendos, just by reducing or increasing the pressure, respectively. A piece of paper also lies across the actuated strings, giving the sound a nice reedy quality. The resulting sound is difficult to identify, though I think it is clearly acoustic. It also blends quite well with the saxophone.

How did you find out about the fellowship opportunity at IRCAM, and why did you think it was a likely possibility to extend your work on the EMPP?

The program was called the Musical Research Residency, though this year they have expanded it to the Musical and Artistic Research Residency Program. It is directed by Arshia Cont, who is involved with a variety of really interesting things over there. My understanding is that the original purpose of the residency was to provide an alternate avenue for composers to become involved with IRCAM, and a way for the people at IRCAM to reach out to a wider variety of composers. The traditional compositional route through IRCAM entailed moving through the two years of the Cursus, after which one might remain connected in various ways such as proposing a commission. Unfortunately spending the 1-2 years in Paris to participate in the Cursus was never really an option for me. The Research Residency was designed to allow composers such as myself, who may have had no previous direct contact with IRCAM, to spend time in house working directly with the researchers. It’s a remarkable program, and really an amazing opportunity to work with some incredible people doing incredible things. I’m not sure how the newly expanded purview will affect the central goals, though it’s clearly no longer targeted only at composers.

I don’t remember exactly when I first learned of the program, but I suspect it was through one of the many mailing lists out there. It’s still a new program; its first residency began in 2010. As I looked into it I discovered that I knew a couple of the people who had come through it already or were currently there. Ben Hackbarth was in residency the first year, and Rama Gottfried was there while I was applying in 2011. I reached out to both of them for advice, and they were both extremely helpful, filling me in on the current research interests of the various teams and on the general culture there.

Can you give some specifics about the application (process?)–what were they looking for? What specific compositions or information did you send? Why do you think your application was successful?

Regarding the creation of my proposal, I went through several generations of ideas. I was more or less sure from the beginning that my project would involve the EMPP, since that is the most prominent area of my current research. Based on the advice I received and my general sense of the purpose of the program, I assumed they would be more interested in something related to their existing software than in, say, a project involving modifications to my physical device. I toyed with several possibilities, such as a software-based self-tuning system (which would adjust the frequency of the signal being sent to the electromagnet; more complicated than it sounds!), or some kind of elaborate Max patch to allow non-programmers to easily control the device. Ultimately the project I proposed involved the creation of a physical model of the interaction between an electromagnet and a resonating body (an abstract can be found here: http://www.ircam.fr/1046.html?&L=1). This appealed to me for a number of reasons. It would allow me to investigate the physics of this incredibly intriguing interaction: an electromagnet exciting a string. I would clearly need to bring the device there in order to measure its response, which would also allow me to introduce it to the IRCAM community. It would also let me build on their existing physical modeling software, Modalys. I had some experience programming in Common Lisp, the control language for Modalys, and I was eager to jump back in and improve my skills.

I think the project appealed to IRCAM because it fit in rather well with the current activities of the Instrumental Acoustics team. Adrien Mamou-Mani, for example, is doing research on the physical alteration of traditional instruments, allowing acoustic systems to be transformed electronically: a perfect fit. Joël Bensoam and Robert Piéchaud, on the other hand, focus on the virtual side of instrument manipulation, working primarily with Modalys. I thought they might be interested in examining in detail the physics of an acoustic system involving electromagnets. The creation of a physical model seemed like a perfect way to do this, and I just happened to have a complex system available for analysis and measurements.

As I developed the proposal the biggest unknown was whether Modalys already incorporated something like an electromagnetic interaction. Being completely unfamiliar with the software, I wasn’t sure if my proposal would even make sense in the context of its capabilities. Fairly early on in the process I contacted Joël, who I ended up working closely with over the course of the residency, and asked him about my proposal-in-progress. Joël is a physicist and serves as the primary researcher on the algorithms used by Modalys to calculate the interactions between virtual objects. His response was very helpful, and conveyed his initial interest in the project. I was of course sure to mention that in my proposal.

For the application itself, I spent some time refining the proposal and working out the details, as best I could, of how the project would unfold. It is necessary to pick a duration, which is very tricky if one is unsure of exactly how the research will progress. My duration was based more on practical concerns and a desire to be there for as long as possible than on a project-based timetable. I was very glad that I asked for as much time as I did (I was there for 5 months), as it takes quite a bit of time to develop these kinds of projects. We did manage to complete the research and develop the physical model, but at the end I felt like we were just getting to the really interesting part. It certainly could have gone on for longer and continued to be productive.

I’m sure the project proposal itself is important to the success of one’s application, but I know there are other factors. At the time I applied I was asked to submit a curriculum vitae, a work plan, and two samples of prior work. They are understandably eager to select people with the skills and motivation to complete the projects they have proposed, and I know they evaluate the prior experience of applicants to get a sense of their chances of success. Selecting which samples of work to submit is always challenging. I knew I wanted to submit at least one electromagnet piece, and Of Dust and Sand is both the most recent and, in my opinion, the most successful. The second choice was less obvious, though in the end I did submit another piece with electromagnets, Negative Mirror Part II. As I recall, this decision was based more on the aesthetics of the piece than on its use of electromagnets.

IRCAM has a strong reputation as a sort of Mecca for electronic music, and yet also seems mysterious and impenetrable to people from the outside (perhaps esp. to American composers?)–can you describe your experience in working there? How has it been? Would you encourage other composers to pursue working there? What should composers know about or prepare for to be competitive in applying for opportunities there?

I would absolutely encourage anyone who is interested to work there. My experience was really amazing, both in terms of the research with which I was directly involved and the connections I made, within and outside my immediate team. Having access to their knowledge base was a remarkable opportunity. I found everyone to be extremely friendly and very helpful. That being said, it is daunting to enter such a complex and potentially mystifying environment. My French is very limited, which in many ways was not a problem as the people I was working with could speak English quite well. Nonetheless it did make it somewhat more difficult to navigate the system, and to understand what was expected of me in general terms. The specific expectations were clear, but there are so many intangible factors that come into play when dealing with what is essentially a micro-society. Not to mention the larger society of Paris, with all of its complexities of interaction.

I’m not sure how representative my personal experience is of these residencies, but I can certainly explain a bit about the trajectory of my stay. As I mentioned, my project lasted five months. We arrived in the first week of January, and I got started at IRCAM in the second. My first tasks were to learn Modalys, re-learn Common Lisp, and generally get to know my way around. I also gave a kickoff presentation in my third week there, as a general way of introducing myself and my work to everyone. It wasn’t really until after the presentation that we started planning out the details of the project.

I did have a moment of concern early on. As I told my new colleagues about my project, and especially after my kickoff presentation, a number of people suggested that Modalys could already do exactly what I was proposing to implement, through the use of something called a force connection. If that were true I would have a great deal of free time over the course of my residency, but that didn’t seem like a good way to spend my time. It turned out that there was a fundamental difference between the force connection and what we were creating, called the induction connection, but it took a while to be sure!

The first thing to do was take measurements. There were a couple of issues that Joël wanted to investigate right off the bat. First, according to his calculations, when an electromagnet was placed over a string and a signal of a given frequency introduced to the electromagnet, the string should vibrate at twice the frequency, an octave up. In my system there are permanent magnets on the sides of the electromagnets, which Steven Backer and Ed Berdahl added to cancel this effect, causing the string to vibrate at the same frequency as the input signal. Joël wanted to measure the specific additional effects of introducing the permanent magnets. Second, he was intrigued by the fact that a string under the influence of an electromagnet in a grand piano will vibrate both vertically and horizontally. The same is true when it is struck by a hammer, but in that case there is inevitably some lateral motion introduced by imperfections in the mechanical system. Electromagnets, on the other hand, are theoretically incapable of exerting a horizontal force; the string should just vibrate up and down. The culprit is the piano body, which creates an incredibly complex system of interactions. To examine a string in isolation, removed from the complexities of a piano, we needed a different suspension system. Alain Terrier, whose title is “Technician” but who is involved in a variety of research there, came to the rescue. He built a monochord out of steel girders that would theoretically provide fixed termination points, unlike the bridge of the piano, which is designed to vibrate in sympathy with the strings. We tested the string on the monochord, and strangely enough it continued to vibrate in both modes. For a minute we thought we had discovered some new type of interaction. But then we attached a couple of heavy clamps to anchor the girder to the table, further reducing the vibration of the anchor points, and we were left with almost no horizontal vibration.
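The frequency-doubling prediction, and the role of the permanent magnets, can be illustrated with a deliberately simplified model. The sketch assumes that the attractive force on the steel string is proportional to the square of the total magnetic field at the string, that the coil's field is proportional to the drive signal, and that the permanent magnet simply adds a constant bias field; it ignores the gap distance, core saturation, and the string's own dynamics. The function names and numbers (a 100 Hz drive, bias 1.0, drive 0.2) are hypothetical. It illustrates the squaring argument only and is not the algorithm developed at IRCAM.

```python
import math

def force_signal(bias: float, drive: float, freq_hz: float,
                 sample_rate: float, num_samples: int) -> list[float]:
    """Toy force on a steel string: the square of the total field, where the field
    is a constant permanent-magnet bias plus a sinusoidal coil field.
    The proportionality constant is taken as 1 (assumption)."""
    return [
        (bias + drive * math.sin(2 * math.pi * freq_hz * k / sample_rate)) ** 2
        for k in range(num_samples)
    ]

def amplitude_at(signal: list[float], freq_hz: float, sample_rate: float) -> float:
    """Amplitude of the sinusoidal component at freq_hz, from a single DFT bin.
    Exact when the signal spans a whole number of cycles of freq_hz."""
    n = len(signal)
    re = sum(s * math.cos(2 * math.pi * freq_hz * k / sample_rate) for k, s in enumerate(signal))
    im = sum(s * math.sin(2 * math.pi * freq_hz * k / sample_rate) for k, s in enumerate(signal))
    return 2 * math.hypot(re, im) / n

RATE, F, N = 4000.0, 100.0, 4000  # one second of a 100 Hz drive (hypothetical values)
cases = [("coil only", 0.0, 1.0), ("coil + permanent magnet", 1.0, 0.2)]
for label, bias, drive in cases:
    force = force_signal(bias, drive, F, RATE, N)
    print(f"{label}: {F:.0f} Hz -> {amplitude_at(force, F, RATE):.3f}, "
          f"{2 * F:.0f} Hz -> {amplitude_at(force, 2 * F, RATE):.3f}")
# coil only: 100 Hz -> 0.000, 200 Hz -> 0.500
# coil + permanent magnet: 100 Hz -> 0.400, 200 Hz -> 0.020
```

Without the bias the force is sin²(ωt) = (1 − cos 2ωt)/2, so the entire oscillating force sits an octave above the drive. With a bias B₀ and drive amplitude b, the force (B₀ + b sin ωt)² has a component 2B₀b at the drive frequency and only b²/2 at the octave, so a strong permanent magnet makes the drive frequency dominate.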

The next step involved making what seemed like thousands of measurements of the string response under a variety of inputs. Joël and I would get together to determine what he needed to know in order to develop his master algorithm, and I would lock myself in the lab with the monochord and record its responses. This kind of experimentation was completely new to me, and it took a while for me to get it right. Often while running the experiments some minor detail would nag at me (should I keep adjusting the height of the electromagnet? It adds another variable to the experiment, and I’m not keeping track of it at all, but I’m sure it’ll be fine…). Sure enough, when I presented the results to Joël, he would ask about that very thing. It seems rather obvious in retrospect, but it turns out that when running an experiment you can’t just change variables and not keep track of those changes. So then I’d have to run the tests all over again. It was actually rather fun, though certainly tedious. I did get much better at it, though I occasionally felt like I was wasting a great deal of my time and his. But Joël was always very encouraging, and never seemed bothered by having to offer me that kind of guidance.
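The lesson about tracking every variable can be made concrete by logging each take together with all of its conditions and checking, before analysis, which conditions actually changed during a run. The record fields below (drive frequency, drive level, magnet height and position) are hypothetical examples of what such a log might hold, not the protocol used at IRCAM.

```python
from dataclasses import dataclass, asdict

@dataclass(frozen=True)
class Take:
    """One monochord recording plus the conditions it was made under (illustrative fields)."""
    take: int
    audio_file: str
    drive_freq_hz: float
    drive_level_v: float
    magnet_height_mm: float
    magnet_position_mm: float

BOOKKEEPING = {"take", "audio_file"}  # fields expected to differ on every take

def changed_conditions(takes: list[Take]) -> set[str]:
    """Return the experimental conditions that differ between any of the takes."""
    if not takes:
        return set()
    rows = [asdict(t) for t in takes]
    return {
        name
        for name in rows[0]
        if name not in BOOKKEEPING and len({row[name] for row in rows}) > 1
    }

session = [
    Take(1, "take01.wav", 110.0, 1.0, 3.0, 120.0),
    Take(2, "take02.wav", 220.0, 1.0, 3.0, 120.0),
    Take(3, "take03.wav", 330.0, 1.0, 2.5, 120.0),  # height was nudged; now it shows up
]
print(sorted(changed_conditions(session)))  # ['drive_freq_hz', 'magnet_height_mm']
```

If a run was meant to vary only the drive frequency, a second entry in that set is a signal to redo or re-plan the run before handing the data over.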

In the meantime, I was doing research about the many complex interactions that go into creating the distinct sound of a piano. I knew that before long Joël would have an algorithm for me to work with, and that I would need to compare the sonic results of that algorithm with my own knowledge of how an electromagnet should sound in a piano. My task during that time was to try to replicate as many of the physical interactions in Modalys as was practical. It’s very easy to create a string in Modalys, but pretty much impossible to replicate a piano. Dealing with the bridge alone is incredibly processor intensive – it is just one piece of wood, but it interacts with hundreds of strings as well as a large soundboard with its own set of modes. The strength of those couplings, and thus the rigidity of the bridge, is rather difficult to measure exactly. This factor determines the strength of the couplings between the 2-3 strings of a pitch complex, and their interactions are incredibly complex as well. So I set about the hopeless task of building a piano. That part of the project alone was fascinating. René Caussé, the head of the Instrumental Acoustics team, and Adrien Mamou-Mani offered invaluable assistance with this, as well as many other aspects of the project.
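The role of coupling strength can be illustrated with a textbook toy model: two oscillators, standing in for two strings of a unison, joined by a spring-like coupling of strength κ. This is a hypothetical simplification, not how Modalys represents a bridge; real bridge coupling is frequency dependent and also involves the soundboard. The sketch solves the 2×2 eigenvalue problem in closed form and returns the two normal-mode frequencies.

```python
import math

def coupled_mode_frequencies(f1: float, f2: float, kappa: float) -> tuple[float, float]:
    """Normal-mode frequencies (Hz) of two oscillators tuned to f1 and f2 (Hz)
    and joined by a spring-like coupling kappa >= 0, in (rad/s)^2.

    Toy equations of motion:
        x1'' = -w1^2 * x1 - kappa * (x1 - x2)
        x2'' = -w2^2 * x2 - kappa * (x2 - x1)
    """
    if kappa < 0:
        raise ValueError("kappa must be non-negative")
    w1_sq = (2 * math.pi * f1) ** 2
    w2_sq = (2 * math.pi * f2) ** 2
    # Stiffness matrix [[w1^2 + k, -k], [-k, w2^2 + k]]; its eigenvalues are w^2.
    a, d = w1_sq + kappa, w2_sq + kappa
    mean = (a + d) / 2
    radius = math.hypot((a - d) / 2, kappa)
    low, high = mean - radius, mean + radius
    return math.sqrt(low) / (2 * math.pi), math.sqrt(high) / (2 * math.pi)

for kappa in (0.0, 1e3, 1e5):
    low, high = coupled_mode_frequencies(220.0, 220.5, kappa)
    print(f"kappa={kappa:>8.0f}  modes: {low:.3f} Hz, {high:.3f} Hz  split: {high - low:.3f} Hz")
```

With perfect tuning, one mode stays at the original frequency and the other rises as κ grows; with slight mistuning, stronger coupling generally pushes the two modes further apart. Either way, how the strings interact depends directly on a coupling constant that, as noted above, is hard to measure on a real piano.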

Once Joël had arrived at an initial algorithm, Robert Piéchaud implemented it in Modalys, creating a branch of code for me to work with. Robert and I then ran through a testing cycle to catch any bugs that might be lurking in the code. Once it was stable, I ran the same experiments on the virtual string as I had on the monochord. In some ways this was easier, as it was much more controlled. In others it was just as challenging. Modalys takes into account interactions that aren’t always obvious to the user, and it can be incredibly difficult to truly isolate a given factor.

The big question that loomed throughout this work had to do with the usefulness of the final results in Modalys. Was it in fact going to sound any different than the force connection I mentioned above? If not, the project wouldn’t have been a waste of time since Joël had learned a great deal about the physics of electromagnets, and I had learned an incredible amount about every aspect of the research. But it certainly would have been disappointing. I have a very strong memory of sitting at my desk the night before my exit presentation, which would be open to all of IRCAM and the general public. I had the final algorithm from Joël, and had tested it in a variety of ways. The time had come for me to generate some example audio files. As I did this, I decided to compare the results generated using the new algorithm to those generated with the old force connection. They sounded the same! It was one of those sleep-deprived moments of panic when so much work seems (momentarily) to be for naught.

Of course I kept experimenting into the night and uncovered a variety of ways in which it is quite different indeed, and even managed to come up with some beautiful sounds. The trick was to increase the strength of the electromagnets until the displacement of the string was well outside the reasonable range, at least for a piano. Because the force exerted by an electromagnet depends so strongly on its distance from the object under its influence, introducing a large variation into that distance significantly changes the resulting vibration pattern. When a string is displaced by, say, six inches, an initial sinusoid can be distorted in ways that can be fairly unpredictable over time. I still have a good deal of experimenting to do with the induction connection to fully exercise its capabilities, but at least I’m confident that the results can’t be replicated with a force connection!
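The role of distance can be sketched with a simple power-law model. Assume, purely for illustration, that the attractive force grows as the gap shrinks according to $(d/(d-y))^p$, where $d$ is the rest gap between electromagnet and string, $y$ is the string's displacement toward the magnet, $F_0(t)$ is the force the drive would produce at the rest gap, and $p > 0$ is an unspecified exponent. This form is an assumption, not the equations of the induction connection:

$$
F(y,t) = F_0(t)\left(\frac{d}{d-y}\right)^{p}
= F_0(t)\left[1 + p\,\frac{y}{d} + \frac{p(p+1)}{2}\,\frac{y^{2}}{d^{2}} + O\!\left(\frac{y^{3}}{d^{3}}\right)\right], \qquad |y| < d
$$

For $|y| \ll d$ the bracket stays close to 1, so the force is essentially the prescribed drive $F_0(t)$, independent of how the string moves. Once $|y|$ becomes a sizable fraction of $d$, the terms in $y$ and $y^2$ multiply the drive by the string's own motion; if $F_0(t)$ oscillates at $\omega_d$ and $y(t)$ at $\omega_s$, their product contains $\omega_d \pm \omega_s$, which is one route to the progressively distorted sinusoid described above.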

Perhaps that is an overly verbose explanation of my project, but I thought it might be interesting for others to hear some details about how such a thing might unfold. I would like to offer one final piece of advice to those considering applying for the residency: do lots of homework. Look into what types of research are currently being conducted. There is quite a bit of information on the website, but of course it is difficult to tell how current it is. Also do as much listening as possible. There is certainly a wide range of aesthetics represented at IRCAM, but like any institution it is not infinite. I also recommend contacting the relevant researchers before the application is complete to see if a given project might be of interest to them. As with any initial contact with busy professionals, be as concise and articulate as possible; consider it part of your formal application. It really is a terrific opportunity, and I’m thrilled that I had the chance to spend the time I did over there. I would highly encourage anyone interested to give it a go and apply!

For anyone interested in more information on the EMPP, I am in the process of creating a website, which can be found here:

I plan to post the Common Lisp code I wrote for Modalys one of these days, but that may take a bit of time to get together. In the meantime there is still much to be found there!